#9 - How we evaluate engineering performance at Gusto | Eddie Kim, co-founder at Gusto

You'll read this guide with great Gusto.

Hey friends, welcome to the ninth edition of our Engineering Leadership in-depth guides. Today, we invited Eddie Kim, co-founder & Head of Technology at Gusto, to share his experience evaluating engineering performance.

If you’re new here, check out our previous articles:

How we do Platform Engineering at Microsoft Viva Engage (Yammer) | Diego Quiroga, Principal Software Engineering Manager at Microsoft

How to Land a Board Position | Simi Gupta, Sr Director of Engineering at Procore

Evaluating Team Effectiveness with a Data-first Approach | Bhavini Soneji, Executive Leader (ex-Cruise, Headspace, Microsoft)

Webflow's Playbook for Hiring Engineers in the AI era | Akash Jain (Senior Engineering Manager at Webflow) & Sargun Kaur (CEO at Byteboard)

Unpacking Okta's Tech Architecture | Monica Bajaj - VP Developer Experience & Mark T. Voelker - VP Architecture at Okta

How We Drive Developer Productivity at Yelp | Kent Wills, Director of Engineering, Engineering Effectiveness at Yelp

How to avoid the Migration Trap | Bruce Wang - Director of Engineering at Netflix

How we increased execution by 10x at Coda | Oliver Heckmann - Head of Engineering at Coda

Don’t forget to subscribe to never miss an article and get a chance to win your ticket for Elevate’24, worth $1,200. We’ll pick 10 random winners among our Substack subscribers on March 21st.

Let’s go!

Edward Kim, from Y Combinator to Unicorn status

We’ll start by sharing a few impressive metrics about Gusto:

#1 product for small businesses in 2024 (G2)

#1 place to work in San Francisco

$9.5B valuation

$500+ million in revenue

300,000+ businesses using Gusto

Founded in 2012, Gusto now caters to over 300,000 businesses throughout the US, handling hundreds of billions of dollars in payroll each year. Their all-in-one platform simplifies and automates payroll, benefits, and HR tasks, supported by expert advice.

Edward Kim is part of Gusto’s founding team, accompanying it from its inception at Y Combinator in 2012 to its current unicorn status. As its Head of Technology, he grew the engineering team to hundreds of employees. Before Gusto, Edward created Picwing, a photo-printing startup. He also worked at Volkswagen’s Electronics Research Lab as a senior project engineer, leading cloud navigation and voice recognition projects for Volkswagen and Audi cars.

Edward also made some popular Android apps that earned over $1 million. He holds a bachelor's and a master's degree in Electrical Engineering from Stanford University.

But beyond this impressive résumé, Eddie (as we call him) is responsible for the strong engineering culture at Gusto, promoting values of diversity, quality, community, humility and trust. And not just on paper: Gusto was voted the #1 place to work in SF for a reason! You’ll often see him cheering for his team and giving back to entrepreneurs and engineers on LinkedIn.

How we evaluate engineering performance at Gusto

How do you build a world-class engineering team? This is a question I’ve spent a lot of time thinking about as the Head of Technology and co-founder at Gusto. One of the primary levers is to maintain a high bar for individual performance.

To do that, it’s critical to create a consistent but flexible system for evaluating performance. This system needs to recognize the unique strengths of different individuals, while collectively maintaining a well-rounded team.

In this article, I’ll describe how we think about measuring engineering performance today and share a few examples to help you apply these concepts in your own company.

First, I’ll give an overview of the performance evaluation process. We go through this process twice a year, once in the spring and once in the fall. Here’s how it works:

Employees are asked to share feedback with each other. If the feedback-giver gives permission, the manager is allowed to read it.

Each employee writes up their self-evaluation using the axes framework (which I’ll describe in detail in the next section).

Each manager writes up the evaluation for each of their direct reports using the axes framework. They are allowed (but not required) to use the self-evaluation write-up.

We have calibrations where each manager talks about each of their direct reports using the axes framework.

The framework I’m sharing started in Engineering. Eventually, the Product, Design, and Data teams followed suit. Their axes are largely the same as Engineering, but with some small nuances to account for the differences in their crafts.

Alright, let’s dive into the framework!

Engineering Performance Evaluation at Gusto Today: The 4 Axes of Engineering Performance

We use four axes to evaluate engineering performance: Project, Better Engineering, People, and Better Organization.

Each axis has five possible ratings (1–5). The sum of the ratings (the “sum of impact,” or the area of the polygon the ratings create) is your overall rating for that six-month cycle. The overall rating is what matters.
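As a rough illustration of the “sum of impact” idea, here is a minimal Python sketch. The `AxisRatings` class and its field names are hypothetical and not Gusto’s actual tooling; the sum is best read as a starting signal for the manager’s judgment rather than a precise formula.

```python
from dataclasses import astuple, dataclass


@dataclass(frozen=True)
class AxisRatings:
    """One rating (1-5) per axis. Hypothetical model, for illustration only."""

    project: int
    better_engineering: int
    people: int
    better_organization: int

    def __post_init__(self) -> None:
        # Each axis uses the same five-point scale.
        for value in astuple(self):
            if not 1 <= value <= 5:
                raise ValueError(f"rating must be between 1 and 5, got {value}")

    def sum_of_impact(self) -> int:
        """Add up the four axis ratings."""
        return sum(astuple(self))


# Two different profiles can add up to the same total impact.
specialist = AxisRatings(project=5, better_engineering=2, people=4, better_organization=3)
generalist = AxisRatings(project=4, better_engineering=3, people=4, better_organization=3)
```

Both profiles above sum to 14, which is the point of the framework: different strengths can add up to the same overall impact.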

I like to think of it as a Pokémon approach: each Pokémon has different skills and a different strength in each one. Different Pokémon have different strengths, and collectively they make a great team, which is pretty much how it works in an Engineering team as well.

Let’s take a closer look at each axis.

Project: This axis describes the direct impact you as an engineering IC are having on your actual deliverables. If you’re a manager, it’s about the impact of your team. Examples of Project impact are ideally described in terms of changes to customer behaviors (e.g., higher product usage, lower customer service cases, higher NPS). Project impact can also reflect learnings generated, creating product direction, and validating or adapting product decisions.

Better Engineering: This axis describes how you have improved the overall systems or toolchain in a way that increases engineer effectiveness. Examples of Better Engineering impact include lowering test time, error rates, and new hire ramp time. This axis becomes more important as you become more senior because you impact the overall engineering system at large.

People: The People axis shows the impact of helping others around you become more effective and the team healthier. A wide range of activities fall into this category, including hiring/interviewing candidates, mentoring/coaching, providing helpful feedback, and code reviews. Some of the metrics that can be used for People impact include team member engagement, representative and effective hiring, team health metrics, and qualitative feedback from team members and cross-functional partners.

Better Organization: This axis reflects the impact of making the broader organization healthier. If the previous axis, People impact, is something like spending a lot of time interviewing, an example of Better Organization impact is working to come up with new interview questions or a rubric that improves the overall hiring process. Some other examples include creating and driving diversity programs, and representing Gusto at external events.

Ways to Describe Impact: How Gusto Rates Each Axis

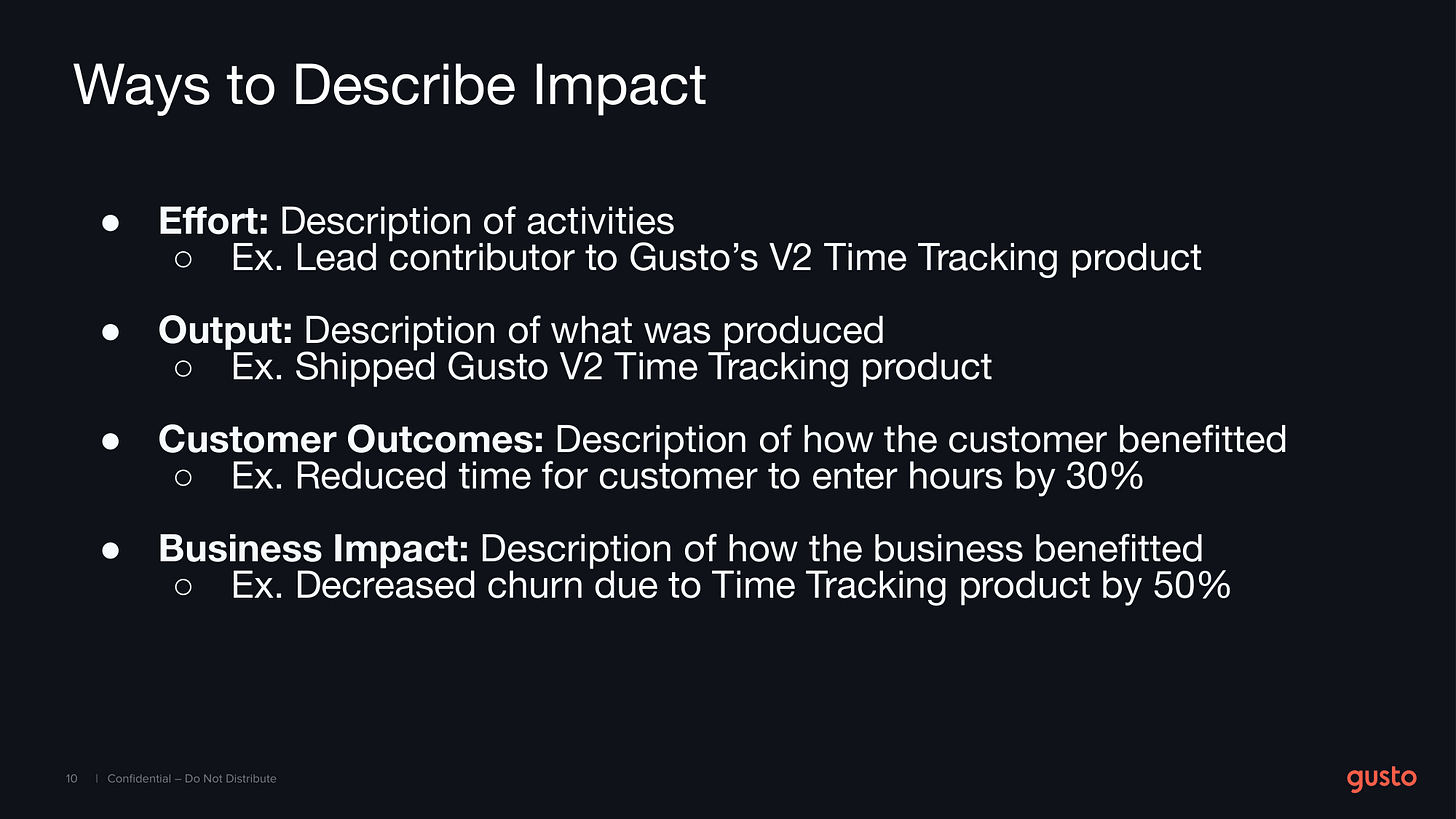

Now that we’ve looked at what we measure, let’s take a closer look at how we go about measuring it. For each axis, we can describe impact in four ways (in increasing order of preference): effort, output, customer outcomes, and business impact.

Effort is simply a description of activities and answers the question of how someone spent their time, such as “Lead contributor to Gusto’s V2 Time Tracking product.”

Output describes what was produced, such as “Shipped Gusto V2 Time Tracking product.”

Customer Outcomes describe how a customer benefited, such as “reduced time for customer to enter hours by 30%.” It’s worth noting that it can sometimes take more time to get these types of measurements. It might take six months to ship a product and another three months to get enough usage to definitively say what impact it’s had.

Business Impact describes how the business benefited from the work, such as “decreased churn due to Time Tracking product by 50%.”

We’re open to letting managers use any of these, but each has its pros and cons. Generally speaking, effort and output are easier to measure but are weaker evidence of impact on the business, while customer outcomes and business impact are harder to measure but demonstrate that impact more directly.

When it comes to the specific ratings for each axis, we have five: Gusto Exemplar, Exceeding Expectations, Meeting Expectations, Sometimes Meeting Expectations, and Not Meeting Expectations.

It’s also important to note that the bar for each rating is higher if you’re at a higher level. For example, what it means to receive a “Meeting Expectations” rating as an L1 is very different from what it means for an L6 to receive the same rating.

For every level and every axis, we have a description of what it means to “Meet Expectations”. Since we have 4 axes and 6 levels at Gusto, that means we have a total of 24 descriptions.
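To make the arithmetic concrete, the grid of descriptions can be enumerated as every (level, axis) pair. This is only a sketch: the level names `L1` through `L6` are an assumption based on the levels mentioned in this article, and the descriptions themselves are not reproduced here.

```python
from itertools import product

AXES = ("Project", "Better Engineering", "People", "Better Organization")
LEVELS = tuple(f"L{n}" for n in range(1, 7))  # assumed naming: L1 through L6

# One "Meets Expectations" description is needed per (level, axis) pair.
description_keys = list(product(LEVELS, AXES))
description_count = len(description_keys)  # 6 levels x 4 axes
```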

Guidance on Writing Performance Evaluations

When writing an engineer’s evaluation, we want to be concise and consistent. We guide managers to include the following:

Context: Team level, how long they’ve been at Gusto, and how long they’ve been on this team

Performance by axis: One or two bullet points per axis, including the rating and description

Overall rating: There’s no precise formula for this and we encourage managers to ultimately use their judgment based on the performance in each axis

Example writeup for iOS engineer

Here is an example writeup for an iOS engineer, using the four axes I introduced above.

Skyler E.

Context: iOS app team, L3, 14 months at Gusto and on the team

Performance by axis

Project impact - Exceeds expectations.

Led the build of the EE time card, which launched successfully in March and is being used by 26% of target ERs (against a goal of 15%). Reduced churn attributed to the time tracking app by 83%.

Drove a few information architecture decisions in the last half that improved usability significantly. These improvements led to higher feature discovery in this half in a way that was not anticipated or credited in the last half. Earned exceeds by overshooting the product goals and the key outcome metric.

Better engineering - Meets expectations.

Helped reduce build failures by 30% along with Jess K.

Earned meets by showing a scope of impact expected from a tech lead.

People impact - Exceeds expectations.

Active referrer and interviewer. Directly involved in engaging and closing two engineers this half. Onboarded Sam, a new iOS engineer, to the team and got strong feedback (Sam ramped up faster than expected and is performing well).

Mentors two iOS engineers on other missions. Consistent peer feedback around the impact of their mentoring, code reviews, and coaching. Earned exceeds by having an outsized impact and being a key to overall team health.

Org contributions - Meets expectations.

Mostly contributing to the iOS community across the org. Facilitated a couple of lunch-and-learn events. Helped set up a mentoring program for iOS engineers.

Earned meets by doing impactful work but they’re not yet shining in this area. Good growth focus area.

Overall rating - Exceeds Expectations.

Strong contributor overall, higher impact than expected both for business impact and the people axis. Strong trajectory towards L4.

Additional Considerations: Required Axes, Calibration, and Promotions

Now that you’ve seen the framework and an example performance evaluation, let’s look at a few of the finer details.

Required axes

At a certain level, some axes become “required,” which means that you must be at least a “Meets Expectations” in that axis for the other axes to kick in. Another way of saying this is if you get a “Sometimes Meets Expectations” in a required axis, your overall rating is capped at “Sometimes Meets Expectations”—no matter what your ratings are in other axes.

For example, Better Engineering is a required axis at L5 and above. The reasoning for this is that once you get to L5, we want you to spend time improving the overall engineering system. Similarly, anyone who manages other people has People impact as a required axis. Project impact is required for everyone at all levels because that’s the bare minimum of being effective in any role.
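The capping rule can be sketched in a few lines of Python. Everything named here (`Rating`, `required_axes`, `cap_overall`) is hypothetical and written for illustration. Two assumptions are worth flagging: the five rating names are mapped onto the 1–5 scale in ascending order, and a “Not Meeting Expectations” in a required axis is assumed to cap the overall rating in the same way as a “Sometimes Meets Expectations”.

```python
from enum import IntEnum


class Rating(IntEnum):
    # Assumed mapping of the five rating names onto the 1-5 scale.
    NOT_MEETING = 1
    SOMETIMES_MEETING = 2
    MEETING = 3
    EXCEEDING = 4
    EXEMPLAR = 5


def required_axes(level: int, is_manager: bool) -> set[str]:
    """Return the axes that are required for this level and role."""
    required = {"project"}  # required for everyone at all levels
    if level >= 5:
        required.add("better_engineering")  # required at L5 and above
    if is_manager:
        required.add("people")  # required for anyone who manages people
    return required


def cap_overall(proposed: Rating, ratings: dict[str, Rating],
                level: int, is_manager: bool) -> Rating:
    """Cap the proposed overall rating by any required axis rated below Meeting."""
    below_bar = [
        ratings[axis]
        for axis in required_axes(level, is_manager)
        if ratings[axis] < Rating.MEETING
    ]
    if not below_bar:
        return proposed  # every required axis is met, so no cap applies
    return min(proposed, min(below_bar))


ratings = {
    "project": Rating.EXEMPLAR,
    "better_engineering": Rating.SOMETIMES_MEETING,
    "people": Rating.EXCEEDING,
    "better_organization": Rating.MEETING,
}

# Same ratings, different levels: the cap only applies where the axis is required.
l5_overall = cap_overall(Rating.EXCEEDING, ratings, level=5, is_manager=False)
l3_overall = cap_overall(Rating.EXCEEDING, ratings, level=3, is_manager=False)
```

With identical axis ratings, the L5 engineer is capped at `SOMETIMES_MEETING` because Better Engineering is required at that level, while the L3 engineer keeps the proposed `EXCEEDING`.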

Calibration

I mentioned earlier that during the calibration process, each manager talks about each of their direct reports using the axes framework. We consider this a process for calibrating the manager, not the employee! Our goal is to ensure that managers are assessing performance in the same way. During this process, we gather all the managers and ask them to share what they’ve written for each of their reports. We allow two minutes for reading and three minutes for discussion for each person. Through this process, managers align on a consistent standard of performance.

Promotions

When it comes to promotions, it's a two-way discussion between the manager and their direct report. Ultimately, the manager should only put the employee up for promotion if the manager believes the employee is ready.

To determine if someone is ready for a promotion, we will recalibrate them at level N+1.

For example, if they’re an L2, we will first calibrate that person with all the L2s and then again with the L3s. If they are calibrated at least in the middle of the pack for L3, they’ll be promoted. In the cases where they fall below the middle of the pack, they won’t be promoted and we’ll provide them with clear feedback as to why not.
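One way to picture the “middle of the pack” test is as a comparison against the median of peers at the next level. The sketch below is a simplification under stated assumptions: calibration is a discussion between managers, not a numeric computation, and the scores here are hypothetical stand-ins (for example, a sum of axis ratings judged against the next level’s expectations). The function name `is_promotion_ready` is invented for illustration.

```python
from statistics import median


def is_promotion_ready(candidate_score: float, next_level_scores: list[float]) -> bool:
    """True if the candidate lands at or above the median of next-level peers."""
    if not next_level_scores:
        raise ValueError("need at least one peer at the next level to compare against")
    return candidate_score >= median(next_level_scores)


# Made-up scores for existing L3s, used only to illustrate the comparison.
l3_peer_scores = [11, 12, 14, 15, 17]

at_median = is_promotion_ready(14, l3_peer_scores)
below_median = is_promotion_ready(13, l3_peer_scores)
```

Here an L2 scoring 14 against L3 expectations sits at the median and would be promoted, while a score of 13 falls below the middle of the pack and would come with feedback instead.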

Accounting for the impact lag

In engineering, there’s often a lag between the work we do and the impact it has on our customers or business. This can make it difficult for us to measure the impact of engineers’ work in a timely manner. Our philosophy is that we want to credit people for the work once we have a reasonable idea that it is generating impact. Put another way, we want to avoid giving credit for work before we have some level of confidence around its impact.

We need to be cognizant of not inadvertently penalizing people for taking on work that takes a long time to complete. The typical way for handling that case is to credit the individual for hitting or exceeding milestones on the route to long-term goals.

Final Thoughts

My goal in walking you through this is to provide you with a framework you can potentially take and adapt to your engineering team. It’s a system we’ve seen a lot of success with at Gusto.

At the same time, it’s important not to be overly dogmatic or precise about the axes system. Consider both quantitative and qualitative factors; not everything can be represented in a number or data point.

Ultimately, this is just one tool to evaluate performance. This particular tool requires a lot of judgment and trust in the managers to arrive at the rating for the engineer, so that’s something to keep in mind if you’re considering this particular approach.

Cheers,

Quang & the Plato team.