#19 - How we built our AI/ML Platform at DoorDash | Sudhir Tonse, Sr Director of Engineering

Lessons about Machine Learning, from a Learning Machine.

Hey friends, welcome to the 19th edition of the Plato Newsletter!

Elevate 2024 is… next week 😱 and we are both nervous and excited. Nervous because so many things need to fall into place for those 2 days and excited to meet you all! But for our matters at hand, we’re thrilled to invite Sudhir Tonse, Senior Director of Engineering at Doordash to share the principles they use to build the AI/ML platform at Doordash.

Sudhir Tonse, from pre-IPO to scale

Sudhir has the habit of picking companies in their most interesting periods (according to him of course): Netflix during their transition from DVD to Streaming and On-Prem to Cloud, Uber from its Pre-IPO days to scale, DoorDash from its pre-IPO days to its current scale/expansion).

As Director of Engineering at Doordash now, his focus has been building the platform that powers Doordash’s AI/ML capabilities and with the recent focus on AI, we asked him to tell us more about his process to keep the tech afloat in a rapidly evolving environment. It led to this great keynote at Elevate 2023, starting point for the blog post that follows.

You can follow Sudhir Tonse on LinkedIn.

How we built our AI/ML platform from the ground up

Artificial intelligence (AI) and machine learning (ML) are on everyone’s minds these days. But for some of us, these are themes we’ve been addressing in our careers for quite some time already.

I’m Sudhir Tonse and I’m the Senior Director of Engineering at DoorDash, which I joined in 2019 to oversee the data platform. Prior to DoorDash, I worked on marketplace and data at Uber and the cloud platform at Netflix.

I imagine you’re already familiar with DoorDash as a food delivery company, and we are that, but we consider our mission to be “empowering local economies.” This can include everything from local florists and grocery stores and convenience stores in addition to restaurants. Similar to Amazon Prime, we also offer a subscription service, DashPass. The slide below offers an overview of our different lines of business.

When I joined DoorDash in 2019, the company had already been in business for several years, but at the time, it did not have an AI/ML platform. My job was to investigate how AI and ML could help improve the business—and that’s the topic we’ll be covering here.

Why and how do you build your own AI/ML platform?

About a decade ago, Marc Andreessen came up with a popular saying, “Software is eating the world.” Most people did not realize what that meant at the time, although today it feels quite clear that software has indeed eaten the world.

A few years ago, he revised his statement to: “AI/ML is eating the software.” And now with gen AI, LLMs, etc., that’s exactly how it’s playing out.

In Silicon Valley, where many startups are based, we’re all asking what this means for our organization. What should we do about it? What should our AI/ML strategy be?

You can participate in this revolution with your own AI/ML platform if you so wish. And in the rest of this article, I’ll share some of my observations and advice for how to go about this.

3 principles to start, evolve, and scale your AI/ML platform

I’ve distilled my observations about starting, evolving, and scaling your AI/ML platform into three main lessons: know your game; dream big, start small; and get 1% better every day. Let’s take a closer look at each one.

Lesson 1: Know your game!

This might sound obvious, but the first step is to know your game. No matter how hard you practice soccer, if you’re invited to play in a basketball game, you’re very unlikely to come out a champion.

The way you can “know your game” is to learn everything you can about both the problem and solution spaces.

In the case of AI/ML at DoorDash, we could think of 100+ use cases before we started building the platform.

Each and every one of the problems we came up with presents an opportunity to use AI and ML. To break it down even further, we looked at the different stages of the food order lifecycle.

Then we began applying AI/ML use cases to each step, as shown in the slide below.

When a customer gets to the checkout stage, AI and ML can be used to predict ETA and for fraud detection.

In the third step, the ETA is also important. It’s a three-sided marketplace—every merchant and every Dasher (the term we use for our delivery people) has to benefit from the overall marketplace. We have to use AI/ML to understand how long it’s going to take to prepare and deliver something under circumstances we can’t control.

And dispatch optimizations—deciding which orders get assigned to which Dashers—is another interesting problem that I’ve had the opportunity to work on at both DoorDash and in my previous role at Uber.

So now you’ve got an overview of the problem space.

What about the solution space? This is our opportunity to come up with different methods, technologies, and tools to address the use cases we identified in the problem space.

For example, for predictions, time series forecasting is a likely solution. For search and discovery, we might use ranking models, and so on.

The question is: What tech stack are you going to use? This is not as simple as it sounds: There are thousands (if not more) of tools for every little thing you can think of, from data platforms, pipelines, and feature stores to observability and model deployment. The slide below hints at the complexity of this topic. To explore all these solutions in more detail, see this blog post.

If you’re starting to think about your own space, here are a few of my tips on how to evaluate different tools:

Start by agreeing on a framework of evaluation. For example, let’s take ML observability as a problem that needs a solution. What areas/features of ML observability are important (and what weight do you give to each of those features)? We’d then evaluate multiple solutions in that space.

Some of the considerations relate to vendor viability (the state of the startup, its maturity, community support, etc.). We usually like to ensure we have evaluated at least a couple of solutions before deciding on one to move forward with.

My advice is to avoid getting locked into any single tool. When building out your tech stack, I recommend dividing it into three buckets:

Fundamental long-living, strategic infrastructure (e.g. cloud provider)

Critical, very large scale part of your platform (e.g. stream or batch processing framework, model training framework/libraries, etc.)

Peripheral parts of your tech stack (e.g. migration tools, observability tools, cost attribution tools, etc.)

With a fundamental part of your tech stack like your cloud provider, it’s going to take massive effort to migrate from one tool to another (e.g. from AWS to Azure). So in this case, I’d say don’t worry so much about avoiding lock-in and just go with the provider of your choice.

But, when it comes to a vendor for data pipeline jobs (Spark, Flink, or any other stream/batch processor), we should retain a choice and strive to avoid vendor lock-in. In our case, we have ensured that we have the ability to run our Spark workloads on multiple compute frameworks (including Databricks, AWS EMR, etc.).

Step 2: Dream big, start small

For a platform team, which is what I run, our customers are internal customers. And outside of the technology part, you’re looking for three qualities in the team you partner with:

They’re willing to work with you

They have a really good use case

The rest of the company understands and believes in this use case as well

If you meet those conditions and you were to solve that use case, the chances are your platform would succeed. And if your platform doesn’t succeed in those conditions, it won’t be successful on the whole, either. So the idea is to divide it into two spaces: dream big and start small.

Dreaming big is about vision and bets. Let’s go back to the DoorDash vision. The vision is about empowering local economies. What does that translate to?

I’d boil this down to:

ASAP. I want my delivery now. While it was good enough to deliver something in an hour five years ago, now people are expecting it to be delivered as fast as possible. So the faster we deliver, the better off we are from a business perspective.

Selection of merchants: If you go to the app and you don’t find your favorite merchant, you’re going to take your business elsewhere. So we want to make sure that the selection exists.

Affordability: cost matters, especially during these days with inflation, layoffs, etc.

Again, dreaming big involves establishing a vision and direction of the platform. A vision statement is intentionally ambitious and abstract.

While you start by dreaming big, you also need to translate that dream into a concrete set of strategic investments. In our case at DoorDash, the vision was to build an AI/ML platform that is “best in class and scalable to a fast-developing business’s growing complex needs.”

The strategic decisions/investments we made were:

To build an in-house centralized ML platform because we feel that the field of AI/ML is advancing rapidly and it’s unclear which particular vendor solution will “win.” Back in 2019, for example, it was hard to know if PyTorch or TensorFlow would be the dominant model training framework of choice.

Focus on building a framework where we could plug and play various components (whether built in-house or bought from a vendor).

Focus on delighting our internal customers (and what they really cared about was a frictionless developer environment, developer productivity, etc.)

Another important part of dreaming big and starting small involves defining strategic bets: Pick a first use case, or what we refer to as a “hero use case” and deliver value quickly, which will enable you to create internal momentum for expansion.

The fact that there are hundreds of ideas shows that there is value in building a platform. Otherwise, if there were just a handful of use cases, the investment needed to build a platform would not be justified.

So how do you find your hero use case? It’s best to avoid a massive design phase that promises to “boil the ocean.” It’s better to start with a specific, contained, well-defined case. This is something that is not trivial and, when launched, will show a decent spectrum of the capabilities you seek to build and when deployed will be well received and acknowledged as a compelling case for a platform investment to be made.

I’d also recommend finding a case where you can exercise a fair set of the overall ML lifecycle (train, deploy, operate) and the support/needs/priorities of the team that is your customer in working together. This is important as during the initial phases of building a platform it will naturally be unpolished, experience hiccups, and may need a few iterations to get right.

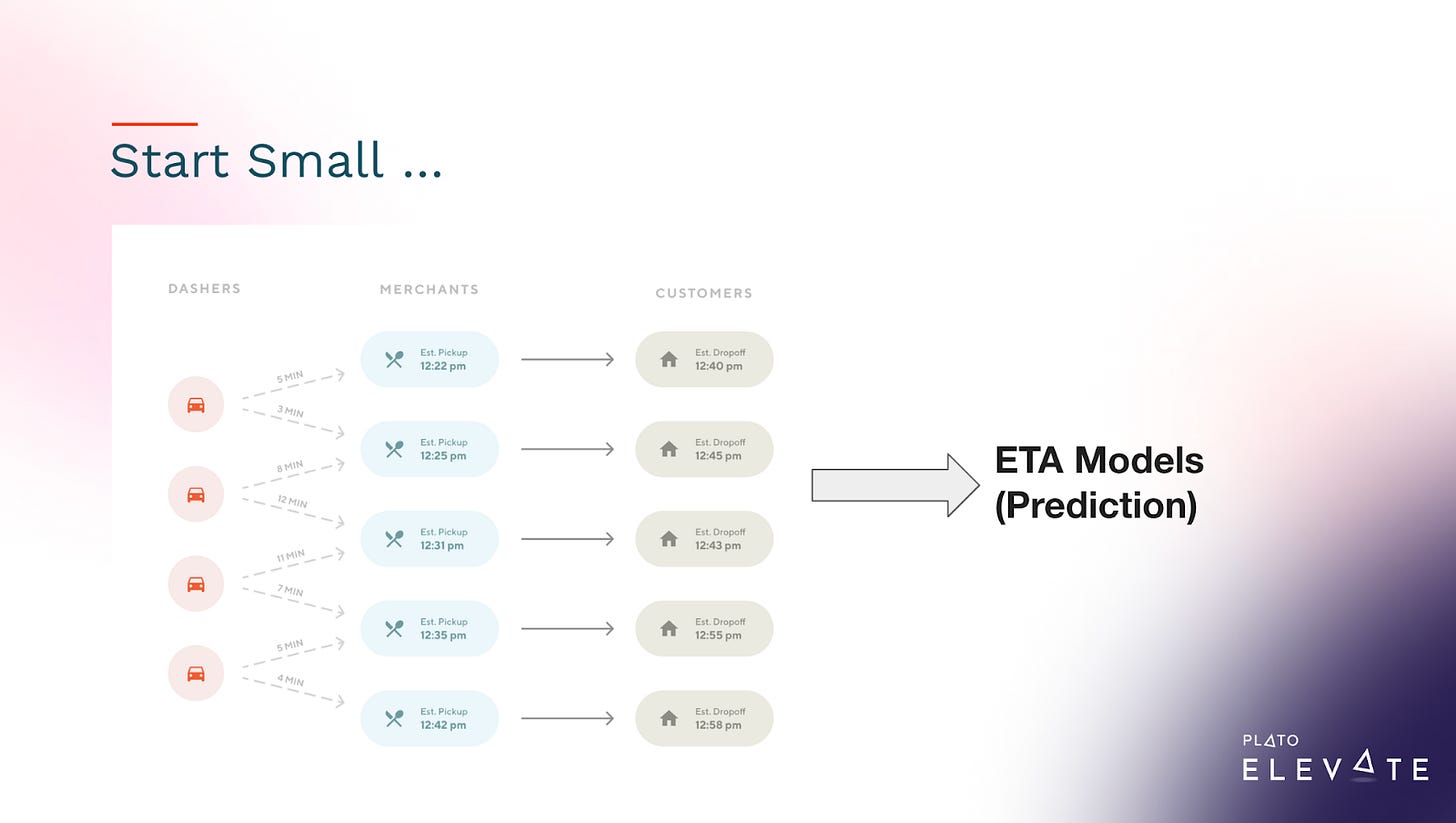

In our case at DoorDash, we chose ETA prediction (predicting the estimated time of arrival of your DoorDash delivery) as a “hero use case” based on the criteria I just mentioned, plus the fact that the ETA team was looking for platform support and were more than willing to work with us to make the project a success.

You also need a strategy for adoption, because if the first set of use cases are not that successful, later adoption by other teams and use cases will be even more difficult. So, as a platform team it's critical that we provide a great customer experience to the teams that take on the burden of being our first customers.

We started with the ETA prediction team and decided to focus on scalability, reliability, and efficiency. What was it going to take in terms of cost? Then we had to make a decision about whether to go with a centralized service or a service for every model. These are all technical decisions that need to be made.

Once you’ve chosen your use case, you need to start making technology choices (in our case, centralized prediction model, prediction batching, and model shadowing).

Success in this case would mean that the customer team that you decided to onboard on to your fledgling early platform is deployed successfully, provides the business impact that it was planned to provide, and that the experience of building it and deploying it on the platform was a good investment that will bear fruit many times over as you iterate these use cases and add to them.

If you make one team successful and get them bought into the merit of your project, they’ll be willing to sing your praises. Then it’s a question of how you can sell it to the rest of your organization.

As a quick recap, remember to dream big, but start small. You can identify hundreds of use cases, but I’d recommend starting with that one hero use case, working with the one team that’s willing to collaborate with you, and then figuring out the adoption strategy—who are you going to sell it to next?

Step 3: Get 1% better every day

This final step sounds simple: Get 1% better every day. But how do we actually do that? It’s about iterating the architecture and learning.

Let’s take an example of search and discovery.

If you search for “Italian food,” you can see the number of predictions that need to be done from a personalization recommendation perspective is two or three orders of magnitude higher than the previous example of ETAs because there are going to be millions of orders per day, but there will be even more people who scroll and search.

So what does that translate to? From an engineering perspective, this means that the feature store is going to increase in volume substantially, as shown in the chart below.

So we came up with a list of requirements and just went with the easiest feature store we could think of, which was Redis. And Redis has great qualities—it can act as a cache, it’s low latency. You can read our blog post on this decision here.

But you’ve got to do optimizations there.

If there are millions of key value pairs, it’s not going to work out well from a scalability perspective. We created a list like the one below, compressed them, and stored them.

We ended up reducing the cost by ⅓ and CPU utilization by 38%, which is fantastic.

Are we done? No! That’s where the 1% iteration keeps coming around.

In mid-2022, we discovered that while Redis was a fantastic solution, it had some problems, like uptime and auto scaling up or down. And that’s where working with CockroachDB or CRDB came to the rescue.

Check out this blog to learn more about why we needed CockroachDB in addition to Redis.

Fast forward to today: We do about 5 million predictions per second and we moved away from a centralized architecture into more of a multi-tenant architecture.

The point is the architecture will evolve—as it should. You have to keep getting 1% better. So if you were to look at the DoorDash blog today, you’d see there are hundreds of use cases we’ve enabled.

What I’d like to impart here is to not be afraid of making incorrect choices. For most problems at a very high scale, it's hard to get everything right the first time. So instead of hunting around for the best solution that will take a long time to build, go for the best solution that you can iterate on over a period of time.

The example I’ve shared here—our journey from Redis to CockroachDB and likely on to something else in the future—has been one of regular iteration.

A platform (whether internal or external) can never solve 100% of the possible use cases. Hence, when new use cases arrive that need new features to be built in the platform, one way to see if that investment is defendable or appropriate is to see how many other teams or use cases will benefit from the missing feature that needs to be built.

This is also a way of hedging your bets as a platform. It’s often hard to guess which product use cases will have a long life and thrive. For example, maybe there is a hypothetical use case that requires OCR capabilities in the platform for some restaurant menus. Is this a good investment? Would any other upcoming use cases require OCR capabilities?

Key learnings and takeaways

To summarize the key learnings I’ve shared:

Know your game. Don’t bring your soccer ball to a basketball game and vice versa.

Dream big, but start small. Identify the hero use case. Make them successful. Then figure out a scaling adoption strategy.

Get 1% better every single day. Iterate Architecture and scale. Add more sophisticated use cases

In addition to these key points, I also have a few of my own learnings to share.

The customer is key

This is not just for external customers—it’s true for internal customers, too. Internal customers all have expectations. In a large company where there are multiple organizations, sub teams, etc., it’s never easy to satisfy everyone. It’s also hard to align everyone’s needs and timelines. Hence, the best tip is to understand not just the technical needs of the platform solution sought, but also to understand the stakeholder investments, involvement, support etc.

And the SLI/SLOs are not the same for everyone. The ads use case is different from support, fraud, etc. You have to understand that prioritization becomes very important. The number of asks that come your way is huge compared to the number of people you have to solve that problem.

Another tip is that “loose agreements” work when everything is running smoothly. When there are outages, loss of business, loss of revenue, etc. then a platform team will surely find itself in the hot seat. Hence it's very important to have very clear observability/operational readiness and agreements in the form of SLOs and SLAs with the customers of your Platform. Typical SLOs include reliability mostly in the form of uptime and latency. For example, “The Inference service will guarantee an uptime of 99.9% and a 99.95 percentile latency of 5 ms for models in the size bucket of ‘XL.’”

It’s a highly evolving space

AI/ML is a vastly evolving space. Thankfully, there are plenty of resources—mostly free—that can be used to stay up to date. There are many podcasts (like TWIML AI Podcast), YouTube channels, people to follow on Twitter/X, Medium newsletters, etc. But for practitioners who are looking to build or operate an internal ML platform, my top recommendation is to read the tech blogs of other companies in a similar field. Of course, I’d be remiss not to mention the DoorDash Tech Blog, which has a wide array of articles that will help anyone interested in following our journey.

You’ll face many decisions and challenges

Not a month goes by without a vendor coming by with a new solution. You have to make decisions like centralized platforms vs. distributed ownership or how to handle volume of support. When you’re successful, there will be even more demands and expectations on you.

One tool I find especially helpful with decision-making is the framework of “one-way doors and two-way doors,” which is often attributed to Jeff Bezos of Amazon. Essentially, two-way doors are decisions that are easy to go back and change if you decide you made a mistake. Before you make a decision, evaluate if the solution is reversible or not. There will likely never be a “right” decision that stands the test of time given evolving needs. However, the amount of time and effort put in taking a decision should simply be based on whether that decision/solution can be practically and effectively reversed if need be.

Closing note: The future of AI/ML at DoorDash

Finally, a quick note about the future of AI/ML at DoorDash. One area we’re looking into is voice ordering. This is still a very green field solution. While the simple use cases are easier to deploy, we will need to iterate longer for a cost-effective, close to accurate production grade system. And customer agents, ads/promotions (for interesting visuals generated by generative AI), etc. are a few other interesting use cases we may be exploring in the near future.

If any of these topics (or any others I’ve covered here) are of interest to you, I’d encourage you to follow the DoorDash Tech Blog for all the latest updates from our teams.

And that’s a wrap! Thanks to Sudhir for sharing behind the curtain strategies to build an AI/ML platform at scale!

Cheers,

Quang & the team at Plato

Huge takeaway from Sudhir's talk:

Dream big, start small—Extreme programming at its core!

And the emphasis on CockroachDB over Redis. Yes! Finally competition among the "boring, stable tech" giants

Getting 1% better everyday 🫰